We live in a golden age of scientific data, with larger stockpiles of genetic information, medical images and astronomical observations than ever before. Artificial intelligence can pore over these troves to uncover potential new scientific discoveries much more quickly than people ever could. But we should not blindly trust AI’s scientific insights, argues data scientist Genevera Allen, until these computer programs can better gauge how certain they are of their own results.

AI systems that use machine learning (programs that learn what to do by studying data rather than following explicit instructions) can be entrusted with some decisions, says Allen, of Rice University in Houston. Namely, AI is reliable in areas where humans can easily check its work, like counting craters on the moon or predicting earthquake aftershocks.

But more exploratory algorithms that poke around large datasets to identify previously unknown patterns or relationships between various features “are very hard to verify,” Allen said February 15 at a news conference at the annual meeting of the American Association for the Advancement of Science. Deferring judgment to such autonomous, data-probing systems may lead to faulty conclusions, she warned.

Take precision medicine, where researchers often aim to find groups of genetically similar patients to help tailor treatments. AI programs that sift through genetic data have successfully identified patient groups for some diseases, like breast cancer. But the approach hasn’t worked as well for many other conditions, like colorectal cancer. Algorithms examining different datasets have produced different, conflicting patient classifications. That leaves scientists to wonder which, if any, AI to trust.

These contradictions arise because data-mining algorithms are designed to follow a programmer’s exact instructions with no room for indecision, Allen explained. “If you tell a clustering algorithm, ‘Find groups in my dataset,’ it comes back and it says, ‘I found some groups.’ ” Tell it to find three groups, and it finds three. Request four, and it will give you four.
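
To make that behavior concrete, here is a minimal Python sketch; it is not taken from Allen's work. It assumes k-means as a stand-in for "a clustering algorithm" and feeds it points scattered uniformly at random, so there are no real groups to find. The function names, the data and every parameter are illustrative choices.

```python
import random
from collections import Counter

def dist2(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))

def kmeans(points, k, iters=25, seed=0):
    """Plain Lloyd's k-means (illustrative); returns one cluster label per point."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)  # start from k distinct data points
    labels = []
    for _ in range(iters):
        # Assignment step: each point joins its nearest center.
        labels = [min(range(k), key=lambda c: dist2(p, centers[c])) for p in points]
        # Update step: each center moves to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centers[c] = tuple(sum(xs) / len(members) for xs in zip(*members))
    return labels

# Assumption: structureless data, uniform in the unit square.
rng = random.Random(42)
points = [(rng.random(), rng.random()) for _ in range(200)]

for k in (2, 3, 4):
    sizes = Counter(kmeans(points, k))
    print(f"asked for {k} groups -> got {len(sizes)} groups, sizes {sorted(sizes.values())}")
```

Nothing in the output signals that the groups are arbitrary. The algorithm simply reports as many groups as it was asked for, which is the gap Allen describes.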

What AI should really do, Allen said, is report something like, “I really think that these groups of patients are really, really grouped similarly … but these others over here, I’m less certain about.”

Scientists are no strangers to dealing with uncertainty. But traditional uncertainty-measuring techniques are designed for cases where a scientist has analyzed data that was specifically collected to evaluate a predetermined hypothesis. That’s not how data-mining AI programs generally work. These systems have no guiding hypotheses, and they muddle through massive datasets that are generally collected for no single purpose. Researchers like Allen, however, are designing protocols to help next-generation AI estimate the accuracy and reproducibility of its discoveries.

One of these techniques relies on the idea that if an AI program has made a real discovery — like identifying a set of clinically meaningful patient groups — then that finding should hold up in other datasets. It’s generally too expensive for scientists to collect brand new, huge datasets to test what an AI has found. But, Allen said, “we can take the current data that we have, and we can perturb the data and randomize the data in a way that mimics [collecting] future datasets.” If the AI finds the same types of patient classifications over and over, for example, “you probably have a pretty good discovery on your hands,” she said.
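
The idea can be sketched in a few lines of Python. This is a hedged illustration, not Allen's actual protocol: it assumes random subsampling as the way to "perturb" the data, k-means as the clustering method, and a simple pairwise agreement score as the measure of consistency. All names (`kmeans`, `stability`) and parameters are hypothetical.

```python
import random
from itertools import combinations

def dist2(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))

def kmeans(points, k, rng, iters=20):
    """Lloyd's k-means with farthest-point initialization; returns one label per point."""
    centers = [rng.choice(points)]
    while len(centers) < k:
        # Next center: the point farthest from every center chosen so far.
        centers.append(max(points, key=lambda p: min(dist2(p, c) for c in centers)))
    labels = []
    for _ in range(iters):
        labels = [min(range(k), key=lambda c: dist2(p, centers[c])) for p in points]
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centers[c] = tuple(sum(xs) / len(members) for xs in zip(*members))
    return labels

def stability(points, k, rounds=30, frac=0.8, seed=0):
    """Average pairwise agreement across clusterings of random subsamples.

    1.0 means every pair of points was always grouped the same way.
    """
    rng = random.Random(seed)
    n = len(points)
    together = {}  # pair (i, j) -> rounds in which i and j shared a cluster
    seen = {}      # pair (i, j) -> rounds in which both were sampled
    for _ in range(rounds):
        # Perturb the data: keep a random 80% of the points (an assumption).
        idx = sorted(rng.sample(range(n), int(frac * n)))
        labels = kmeans([points[i] for i in idx], k, rng)
        for (a, i), (b, j) in combinations(enumerate(idx), 2):
            seen[(i, j)] = seen.get((i, j), 0) + 1
            together[(i, j)] = together.get((i, j), 0) + (labels[a] == labels[b])
    # A pair agrees when it is consistently together or consistently apart.
    scores = [max(t, seen[pair] - t) / seen[pair] for pair, t in together.items()]
    return sum(scores) / len(scores)

rng = random.Random(1)
# Three well-separated groups versus one shapeless cloud (both synthetic).
blobs = [(rng.gauss(cx, 0.3), rng.gauss(cy, 0.3))
         for cx, cy in [(0, 0), (10, 0), (0, 10)] for _ in range(30)]
cloud = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(90)]

print("three real groups:  ", round(stability(blobs, 3), 3))
print("one shapeless cloud:", round(stability(cloud, 3), 3))
```

When the groups are real, the same pairs of points land together in every round and the score stays at or near 1. On the shapeless cloud the groupings shift from one subsample to the next, so the score drops. A per-pair or per-group version of the same score would be one way to express the "these I'm sure about, these less so" report Allen calls for.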

Source:

https://www.sciencenews.org/article/data-scientist-warns-against-trusting-ai-scientific-discoveries

Scheduled Server Maintenance and System Downtime Notice Dec 16, 2025

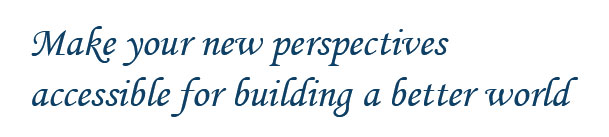

Celebrating CM Editorial Board Members Recognized in the Wor... Oct 10, 2025

Food Science and Engineering Now Indexed in CAS Database Aug 20, 2025

Contemporary Mathematics Achieves Significant Milestone in 2... Jun 19, 2025

Three Journals under Universal Wiser Publisher are Newly Ind... Apr 21, 2025