When robots and humans interact in a shared environment, they must move in ways that prevent collisions or accidents. To reduce the risk of collisions, roboticists have developed numerous techniques that monitor an environment, predict the future actions of humans moving in it, identify safe trajectories for a robot and control its movements accordingly.

Predicting human behavior and anticipating a human user's movements, however, can be incredibly challenging. Determining the future movements of a robot, on the other hand, could be far easier, as robots are generally programmed to complete specific goals or perform particular actions. If human users could anticipate the movements of a robot and the effects these will have on the surrounding environment, they should be able to easily adapt their actions to avoid accidents or collisions.

With this in mind, researchers at Kyushu University in Japan recently created a system that allows human users to forecast future changes in their environment, which could then inform their decisions and guide their actions. This system, presented in a paper published in Advanced Robotics, compiles a dataset containing information about the position of furniture, objects, humans and robots within the same environment, and uses it to produce simulations of events that could take place in the near future. These simulations are presented to human users via Virtual Reality (VR) or Augmented Reality (AR) headsets.

"In order to ensure that a user perceives future events naturally, we developed a near-future perception system named Previewed Reality," the researchers wrote in their paper. "Previewed Reality consists of an informationally structured environment, a VR display or an AR display and a dynamics simulator."

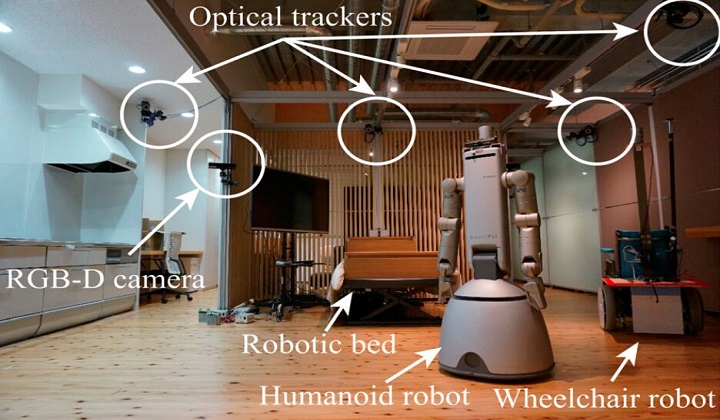

To collect information about the position of different objects, robots and humans in a shared environment, the researchers used a number of strategically placed sensors, including optical trackers and an RGB-D camera. The optical trackers monitored the movements of objects or robots, while the RGB-D camera mainly tracked human actions.

The data gathered by the sensors was then fed to a motion planner and a dynamics simulator. Combined, these two system components allowed the researchers to forecast changes in a given environment and synthesize images of events that are likely to occur in the near future, from the viewpoint of a specific human.
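As a rough illustration of this forecasting step, the sketch below samples a robot's future positions along a planned path and reports the first moment it would come too close to a person. It is not the researchers' implementation: the function names, the constant-speed motion model, the 2D positions and the stationary human are all simplifying assumptions made for this example.

```python
import math

def forecast_positions(waypoints, speed, dt, horizon):
    """Sample future robot positions along a piecewise-linear plan.

    Hypothetical helper: assumes the robot follows `waypoints` (a list of
    (x, y) tuples) at a constant `speed` and stops at the last waypoint.
    Returns a list of (time, (x, y)) samples from t = 0 to t = horizon.
    """
    samples = []
    steps = int(round(horizon / dt))
    for k in range(steps + 1):
        t = k * dt
        remaining = speed * t          # distance travelled by time t
        pos = waypoints[-1]            # default: plan already finished
        for (x0, y0), (x1, y1) in zip(waypoints, waypoints[1:]):
            seg = math.hypot(x1 - x0, y1 - y0)
            if seg > 0 and remaining <= seg:
                f = remaining / seg    # fraction of this segment covered
                pos = (x0 + f * (x1 - x0), y0 + f * (y1 - y0))
                break
            remaining -= seg
        samples.append((t, pos))
    return samples

def first_hazard_time(samples, human_xy, safety_radius):
    """Return the earliest sampled time at which the robot is predicted to
    be closer than `safety_radius` to a (assumed stationary) human, or None."""
    for t, (x, y) in samples:
        if math.hypot(x - human_xy[0], y - human_xy[1]) < safety_radius:
            return t
    return None

# Example: robot drives 4 m along the x-axis at 1 m/s; a person stands at x = 3 m.
plan = [(0.0, 0.0), (4.0, 0.0)]
samples = forecast_positions(plan, speed=1.0, dt=0.5, horizon=4.0)
print(first_hazard_time(samples, human_xy=(3.0, 0.0), safety_radius=0.75))
```

A full dynamics simulator would also model contacts and moving objects; this sketch only captures the idea of rolling a plan forward in time and checking it against where a person is.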

Human users could then view these synthesized images simply by wearing a VR headset or an AR display. On the headset or display, the images were overlaid on those of a user's actual surroundings, in order to clearly illustrate the changes that could take place in the future.

"The viewpoint of the user, which is the position and orientation of a VR display or AR display, is also tracked by an optical tracking system in the informationally structured environment, or the SLAM technique on an AR display," the researchers explained in their paper. "This system provides human-friendly communication between a human and a robotic system, and a human and a robot can coexist safely by intuitively showing the human possible hazardous situations in advance."
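To show why the tracked viewpoint matters, the following sketch expresses a predicted position, given in the room's coordinate frame, relative to a viewer whose position and heading are known. It is a simplified 2D illustration, not code from the paper: the function name `world_to_view` and the planar pose (x, y, yaw) are assumptions for this example, whereas a real headset pose has six degrees of freedom.

```python
import math

def world_to_view(point_xy, viewer_xy, viewer_yaw):
    """Express a world-frame point in the viewer's frame.

    Hypothetical helper: `viewer_yaw` is the heading in radians, measured
    counter-clockwise from the world x-axis. Returns (forward, left), the
    distances ahead of and to the left of the viewer.
    """
    dx = point_xy[0] - viewer_xy[0]
    dy = point_xy[1] - viewer_xy[1]
    c, s = math.cos(viewer_yaw), math.sin(viewer_yaw)
    forward = c * dx + s * dy    # component along the viewing direction
    left = -s * dx + c * dy      # component perpendicular to it
    return forward, left

# Example: a viewer at (1, 1) facing the +y direction looks at a
# predicted robot position at (1, 3), which lies about 2 m straight ahead.
forward, left = world_to_view((1.0, 3.0), (1.0, 1.0), math.pi / 2)
print(round(forward, 3), round(left, 3))
```

Once a predicted position is expressed relative to the viewer, a renderer can decide where to draw it on the display, which is why the headset pose has to be tracked continuously.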

Previewed Reality could serve both as an alternative and as a supplement to more conventional collision avoidance systems, which are designed to identify safe trajectories for robots. In the future, the system could be used to enhance the safety of interactions between humans and robots in a variety of indoor environments. In their next studies, the researchers plan to further expand and simplify the perception system they developed, for instance by creating a lighter and more affordable version that can be accessed on smartphones or other portable devices.

Source:

https://techxplore.com/news/2020-11-previewed-reality-users-future-environment.html
